News

Selected Papers

You can find all my papers on my Google Scholar page.

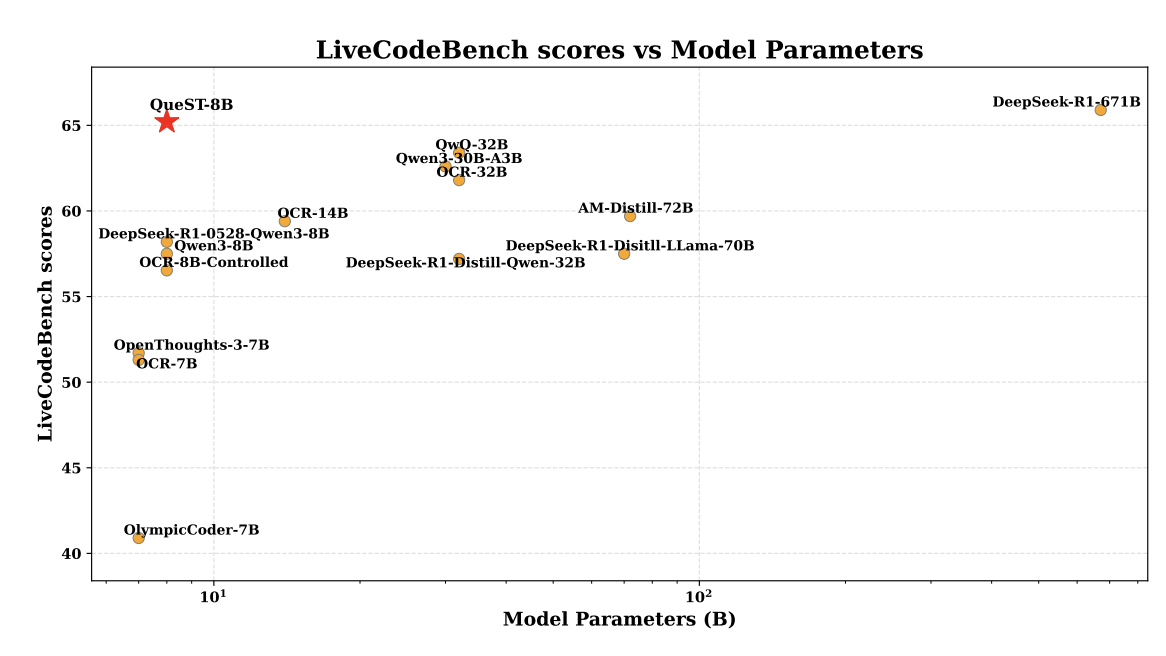

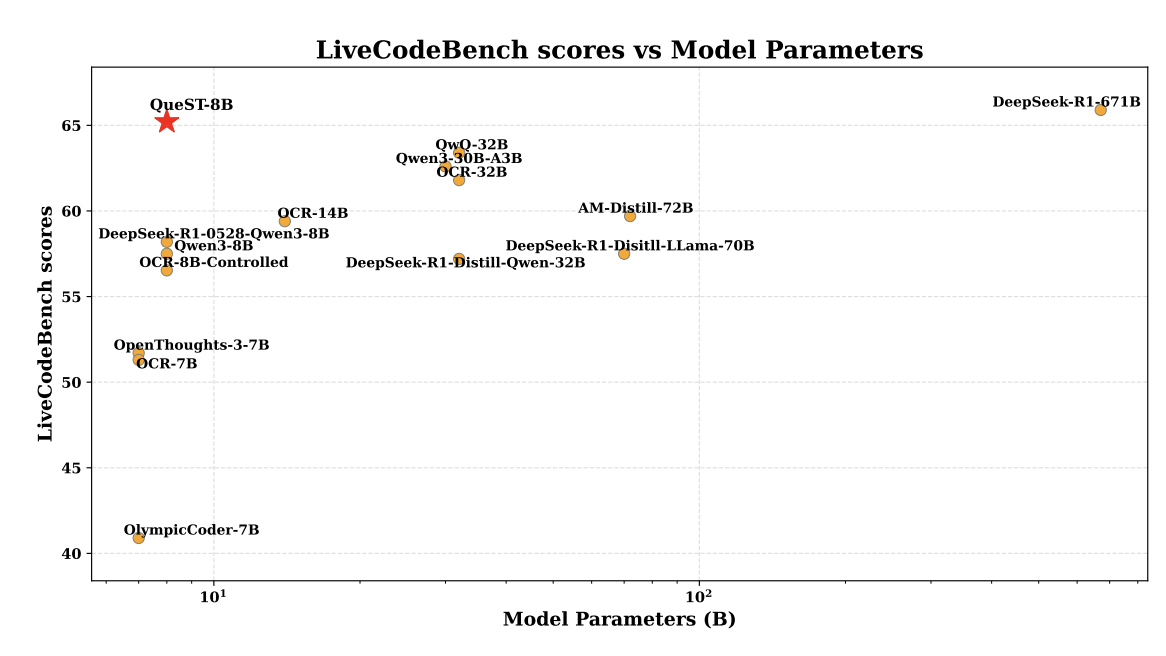

QueST: Incentivizing LLMs to Generate Difficult Problems

Hanxu Hu, Xingxing Zhang, Jannis Vamvas, Rico Sennrich, and Furu Wei

Preprint

arXiv

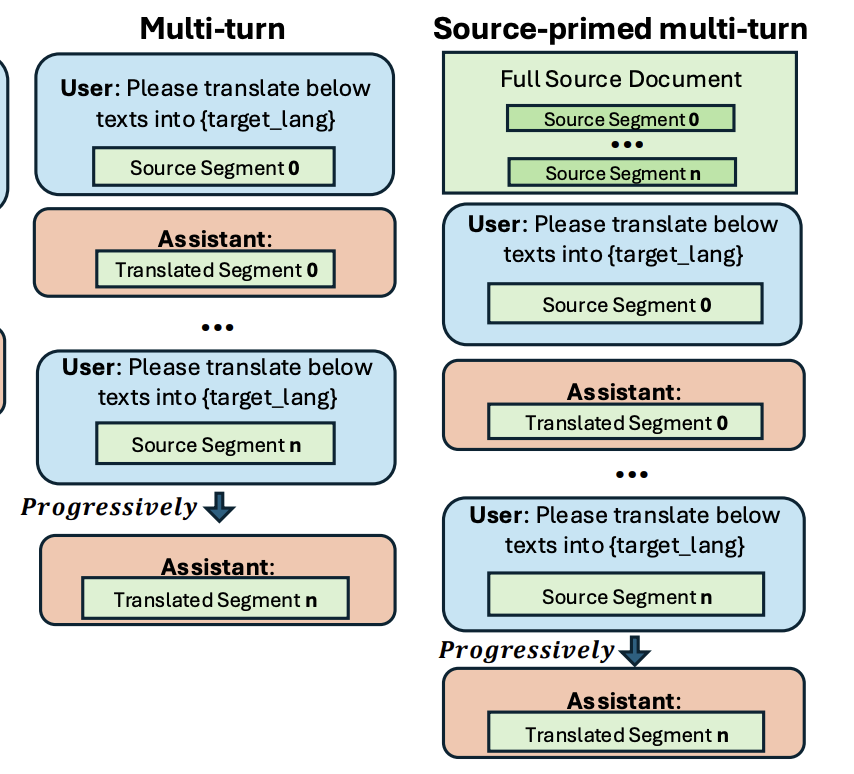

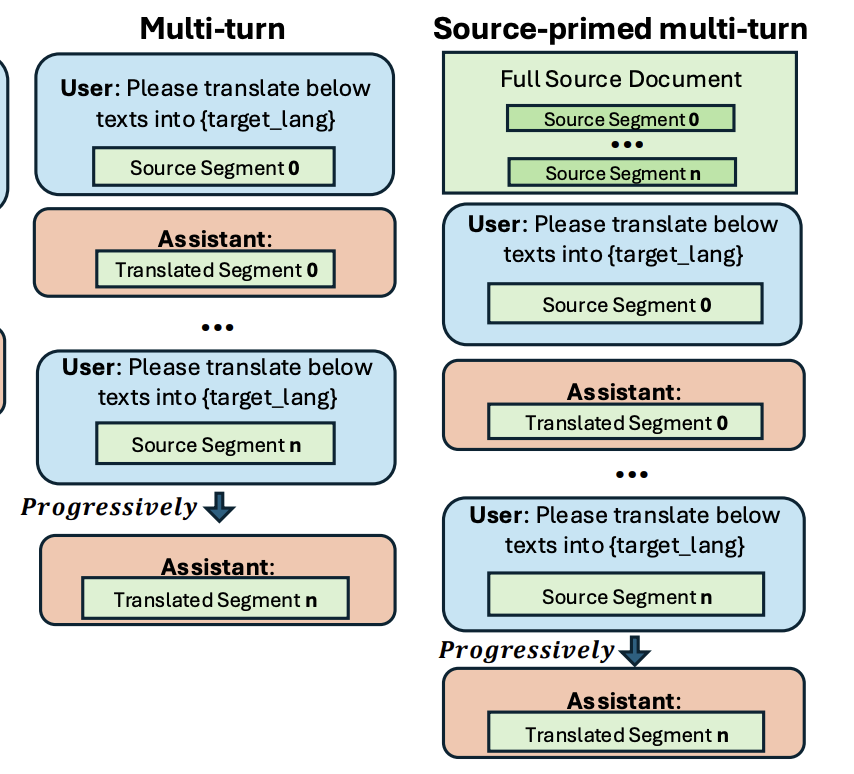

Source-primed Multi-turn Conversation Helps LLMs Translate Documents

Hanxu Hu, Jannis Vamvas, and Rico Sennrich

EMNLP 2025 Findings

Code / arXiv

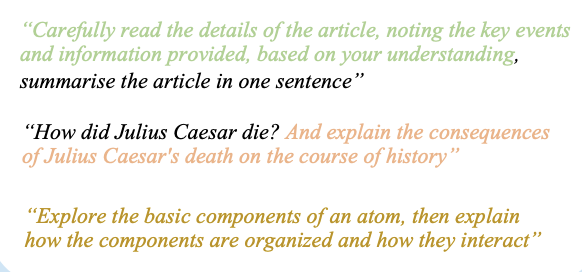

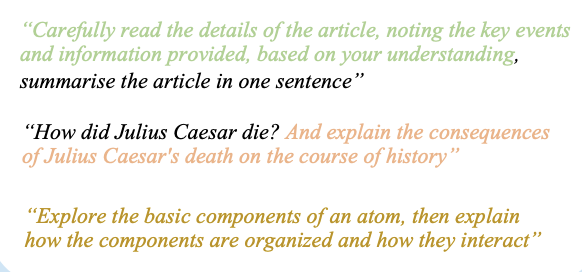

Fine-tuning Large Language Models with Sequential Instructions

Hanxu Hu*, Simon Yu*, Pinzhen Chen*, and Edoardo M. Ponti

NAACL 2025 Main

Project Page / arXiv

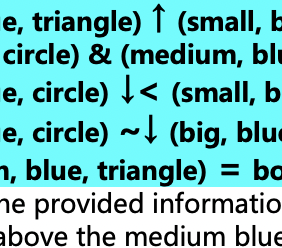

Chain-of-Symbol Prompting For Spatial Reasoning in Large Language Models

Hanxu Hu*, Hongyuan Lu*, Huajian Zhang, Yun-Ze Song, and Yue Zhang

COLM 2024

Code / arXiv

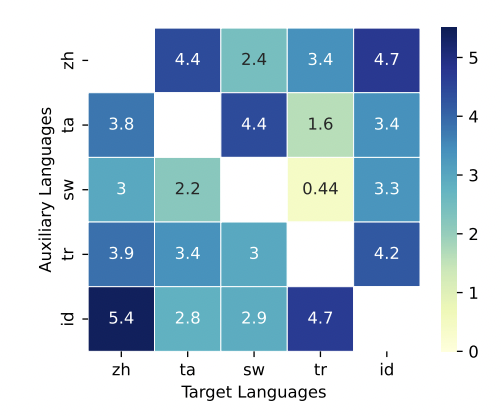

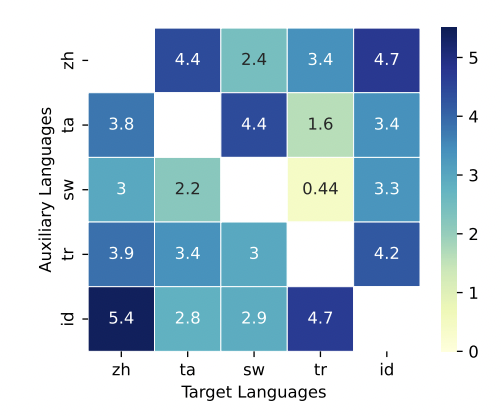

Meta-Learning For Multi-Modal Cross-lingual Transfer

Hanxu Hu and Frank Keller

MRL at EMNLP 2023 (Best Paper Nomination)

Code / arXiv

Service

- Reviewer: EMNLP 2023, NeurIPS 2024, NAACL 2025, ACL 2025, ICML 2025, COLM 2025

- Teaching Assistant: MLP Course (2023-24) at the University of Edinburgh, LLM Course (Fall 2024) at the University of Zurich

Acknowledgments

I am lucky to work with many enthusiastic, intelligent, and hardworking peers, such as Pinzhen Chen at the University of Edinburgh, Simon Yu at NEU, Chenmien Tan at the University of Edinburgh, Wenhao Zhu at Nanjing University, and Zeyu Huang at the University of Edinburgh. I have learnt a lot from them.